Many believe those who procrastinate are lazy or unmotivated. It can be true of some, I suppose. Most, however, are caught in a torturous loop that stems from the brain. Once you fall prey to these endless cycles, it’s difficult to claw your way out.

A War Rages Inside the Brain

There are two culprits triggering procrastination.

The prefrontal cortex, responsible for:

- Planning

- Decision-Making

- Abstract Concepts

- Goals

And the limbic system, which regulates:

- Pleasure

- Fear

- Reward

- Arousal

Note how the prefrontal cortex’s job centers on self-control, while the limbic system’s responsibilities are all emotion-based.

When you have a task to complete, your prefrontal cortex sends a signal to your limbic system that says, “C’mon, it’s time to work.” Because your limbic system is like an unruly teen who seeks only pleasure and avoids pain or discomfort, it often returns a signal that says, “Let’s do something else that feels good right now.”

Procrastination is the war between the two, and we’re caught in the middle. Social media and other online activities have only worsened the problem, resulting in more and more procrastination. Devices like iPhones don’t help by trying to guilt you into increasing your screen time. Don’t fall for it. They do not have your best interests in mind.

Though procrastination may feel good in the moment — the limbic system tricking you into believing your actions are justified — that nagging task lingers in the prefrontal cortex, which leads to guilt, anxiety, and stress. Once you start procrastinating, it’s difficult to stop, because the limbic system rewards you with dopamine, the feel-good hormone.

Those stuck in this torturous loop know they should work on that project, but their mind is in turmoil. Add in real-life stressors, and procrastination worsens.

Yes, I speak from experience. After leaving my husband of twenty-seven years, starting a new life in a new area, moving again to another new area, where I bought my home, I had plenty of reasons to justify procrastination. Thankfully, I also took a year-long break from social media, which helped maintain my inner peace.

For those of us who didn’t grow up with the internet, the “noise” can be downright deafening at times. I also had to learn how to do “guy jobs.” Please don’t jump all over me for that comment. I know it’s sexist, but I had never mowed a lawn or used a snow blower before. New England’s constant snowstorms and blizzards this year have forced me to use muscles I didn’t know I possessed. 🙂 There’s an art to snow blowing. It’s become another creative outlet for me, only with aches and pains afterward. LOL

The most important thing that saved me from endless procrastination was my longtime belief in mindfulness, the practice and awareness of living in the moment.

How To Cure Procrastination

Step #1: Realize what’s happening in your brain.

I solved that for you today, but feel free to study more about this war inside you. Fascinating research.

Step #2: Practice mindfulness.

An easy way to begin the practice of mindfulness is to walk outside. Stop. Close your eyes. Take a few deep breaths, the benefits of which we’ve discussed before.

What do you hear? Birdsong? Pinpoint where without opening your eyes. Is there a pattern to his song, or is he communicating with another?

For weeks, I listened to this tiny wood thrush who nests on my covered porch. Amazing little birds that can easily sing over fifty unique songs and can even sing two different melodies at once. I thought he was singing just to sing, until I noticed him stop to listen. Sure enough, another wood thrush sang back.

My breath halted. Since males try to out-sing each other, this must be a singing competition.

I was so invested in rooting for my little porch buddy, nothing else mattered in those precious moments.

What do you smell? The sticky sap of a pine tree? Smoke from a campfire or woodstove? Pinpoint where without opening your eyes.

What do you feel? Focus your awareness on your skin. Is the wind cool against your cheek? Does the sun warm your scalp?

What do you sense? You most certainly are not alone. Wildlife surrounds you, even in the city. Stand in the moment and engage all your senses, except sight. By taking away the ability to see, you must rely on your other senses.

When you’re done, take three steps forward. Start over. There’s one catch — you cannot list anything you already mentioned. This will force you to dig deeper, concentrate harder, your awareness opening like rose petals. Repeat at least three or four times. With each step forward, you’re healing your mind, body, and spirit.

Mindfulness is an important life skill to master.

Besides being a cure for procrastination, mindfulness has many health benefits:

- Reduces stress

- Reduces anxiety

- Fights depression

- Improves focus and memory

- Lowers blood pressure

- Boosts immunity

- Improves sleep

- Manages chronic pain and illness

Step #3: Work for five minutes on the project you’ve been avoiding.

Your limbic system will reward you with a dopamine hit. Good job! You did it! If you struggle to continue past five minutes, that’s fine. Stop there. Do this every day. Soon, you’ll be so invested in the project that five minutes will turn into fifteen, thirty, an hour, or more.

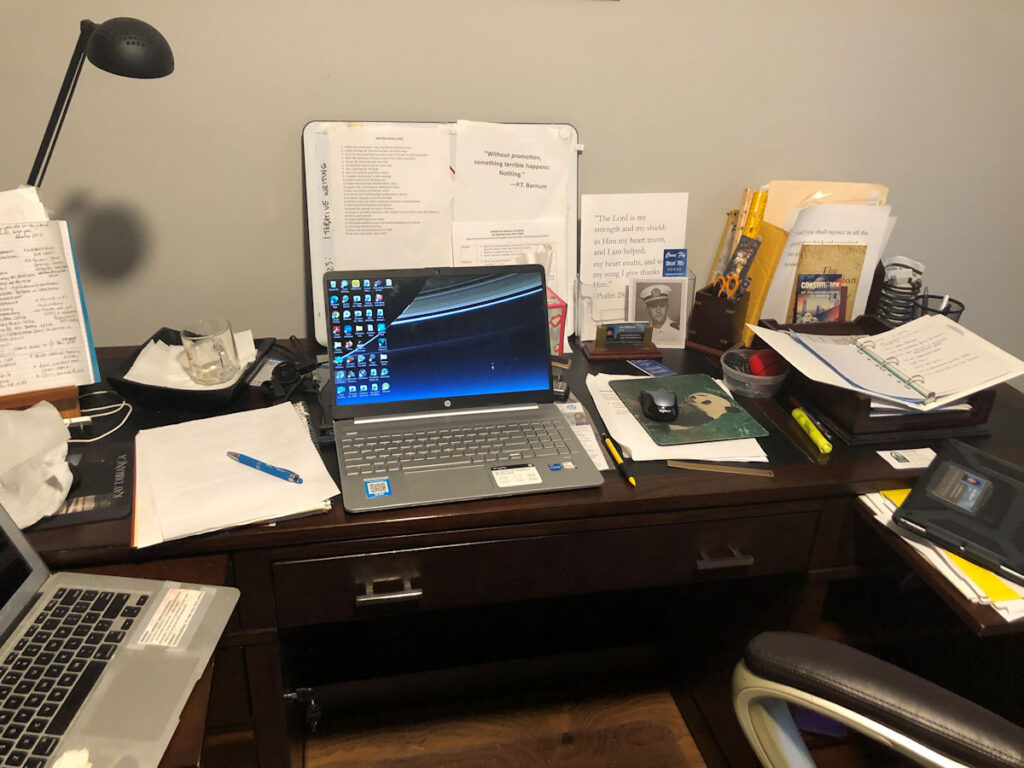

Though writers are not immune to procrastination (some say we’re the poster children for it), it does help to have a regular writing routine. Walking into an office or sliding on headphones sends a silent signal to the brain that it’s time to work, but that doesn’t mean the limbic system won’t respond with, “Let’s play instead.”

The next time you find yourself scrolling on social media instead of completing a task, take a moment to ask yourself why. Are you procrastinating or do you need a break? If it’s the latter, enjoy. Mindless fun is important, too. If it’s the former, put down the phone and walk outside. Please don’t tell me it’s too cold. I’ve been out there in double-digit negative temps and survived just fine. Bundle up. It’s worth the effort. What you’ll experience is the cure for what ails you.

If, for health or mobility reasons, you are unable to go outside, use the body scan method to practice mindfulness. Recline in a comfortable position with your eyes closed. Breathe deeply for a few rounds. Then focus on your feet. Note how your heels touch the surface below them. Do your toes tingle? If you concentrate long enough, you’ll feel blood flowing through your feet.

Next, take note of your ankles. Little by little, work your way up your body. When you reach each organ, envision how it works inside your body. Once you reach your scalp, you may open your eyes.

The body scan method also works for insomnia.

What do you think about this war inside your brain?

True confession time. I have a horrible nervous habit — reaction? — when someone falls. I’ve struggled with it my entire life, but try as I might, I can’t change my behavior. Believe me, I’ve tried.

Hope you enjoy them!

The modern Thanksgiving holiday is based off a three-day festival shared by the Pilgrims and the Wampanoag tribe at Plymouth Colony, Massachusetts, in 1621. The feast celebrated the colonists’ first successful harvest in the New World. While modern Thanksgiving always lands on the fourth Thursday in November, the original feast happened earlier in fall, closer to harvest time in mid-October, when Canadians celebrate. And no one ate turkey.

During the Ottoman Empire, guinea fowl were exported from East Africa via Turkey to Europe. Europeans called the birds “turkey-cocks” or “turkey-hens” due to the trade route. So, when Europeans first sailed to North America and discovered birds that looked like guinea fowl, they called them turkeys. To be clear, turkeys and guinea fowl are two different animals.

Native Americans have used cranberries to treat wounds and dye arrows. Much like holly, dried cranberries also adorn table centerpieces, wreaths, and garlands.

Tisquantum, also known as Squanto, was a Native American from the Patuxet tribe, who was a key figure to the Pilgrims during their first winter in the New World. He acted as both an interpreter and guide as Pilgrims learned to adjust to their new way of life at Plymouth.

Two weeks ago, I reviewed the 35th Annual Flathead River Writers Conference in Kalispell, Montana. If you missed that, here’s the

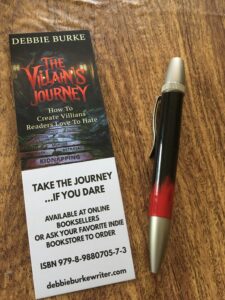

A writing conference is a great chance to build a mailing list. I took the opportunity to encourage sign-ups for my list with a prize drawing—a hand-crafted wood pen inspired by my book,
