Amazon’s Latest Rollout – And Controversy

Terry Odell

Amazon is rolling out “Ask this Book,” a new feature that allows readers (of Kindle books) to interact with the book. It’s currently available for thousands of English-language books on the Kindle iOS app in the U.S. The feature will be enabled on Kindle devices and Android OS next year.

Forget a character’s name? Can’t remember where a scene took place? Instead of searching, which can be a tedious process, you can now ask the AI genie inside the book and it will answer you, also inside the book. No scrolling, no losing your place.

That seems harmless enough. Helpful, even. But you can also ask more general questions, and AI will answer in a paragraph, and that’s where the controversy begins.

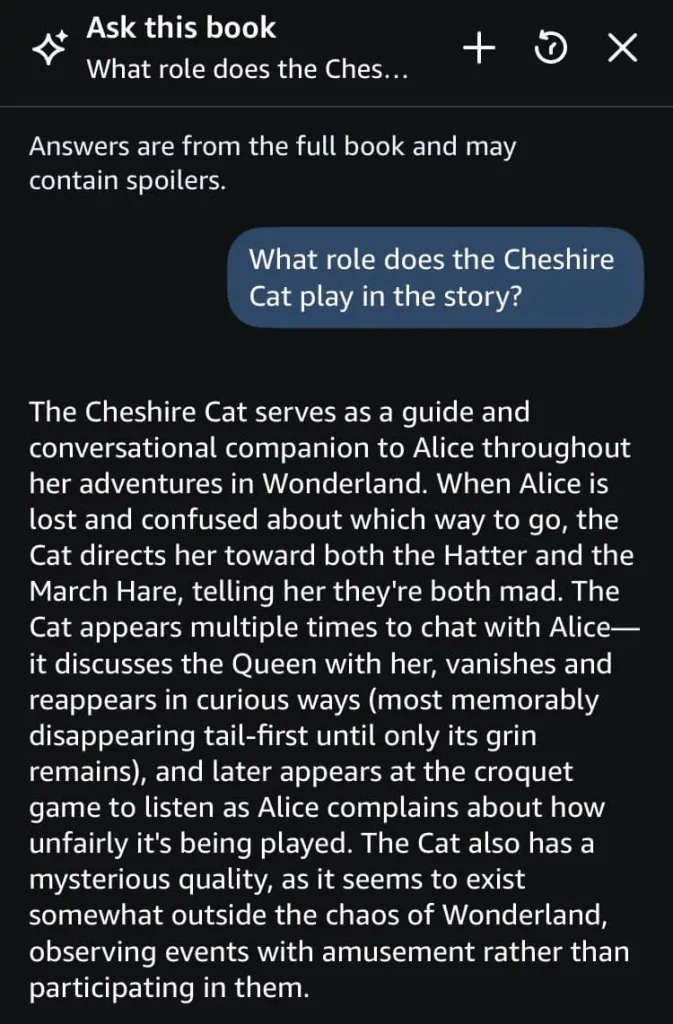

This example is from a Kindlepreneur article written by Kevin J. Duncan, Head of Content. Using the book “Alice in Wonderland,” he asked what the role of the Cheshire Cat was.

The response:

The argument continues that these sorts of answers are the “opinions” of AI. To quote Duncan, “the system is giving you its version of what that thing means. It decides what matters, what doesn’t, what’s central, and what can be glossed over.”

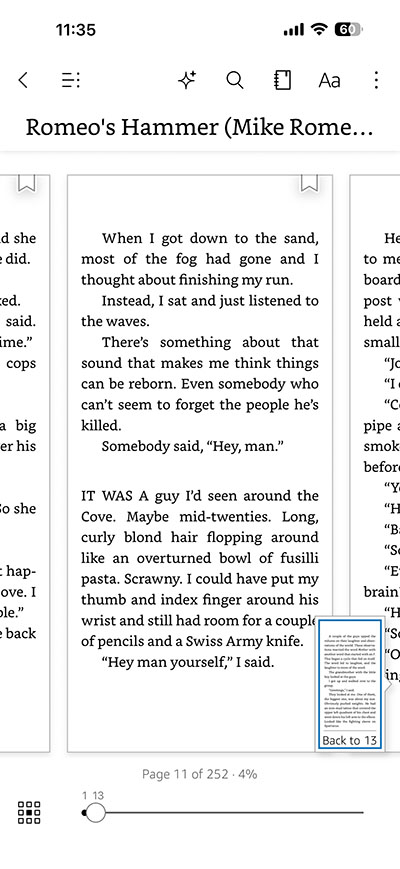

My own test. I don’t own a Kindle, and I buy almost all my books from Barnes & Noble, but I do have a few books from Amazon, admittedly. Most of them are the freebies that come with my Prime membership, with occasional purchases from authors I’m familiar with. I didn’t have access to the Ask This Book feature when I opened a book from my Kindle library to read on my PC, but I did get the feature on my phone.

(Personal note. Reading on my phone is a last resort. I have a Nook tablet and an iPad mini, both of which are much more eye-friendly, but sometimes I’m stuck waiting unexpectedly and don’t have one of those devices with me.)

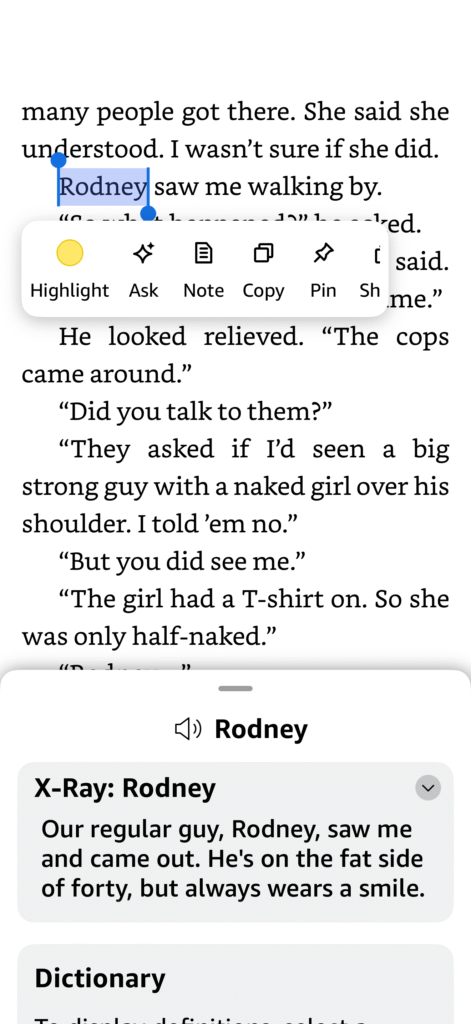

The Ask This Book feature is activated by tapping the page and getting a menu of icons at the top of the screen. Ask This Book is the diamond shape with the little +.

Or, you can highlight a word or portion of text, which should give you the option to ask your question.

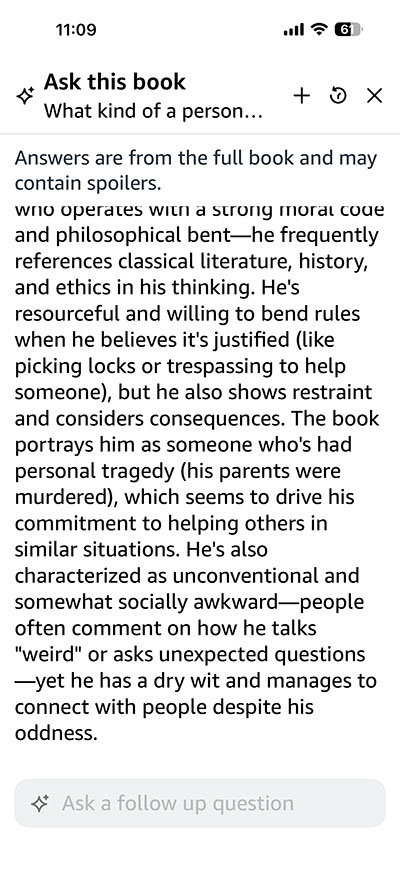

You can also choose between having AI look at the whole book, or only up to as far as you’ve read, which is supposed to avoid spoilers. I used the whole book option and asked the question, “What kind of person is Mike Romeo.”

This is the response I got. (Sorry, my phone wouldn’t let me shrink the text to get the entire answer on the screen, but you can probably figure out the first sentence.)

**If you’re reading this, JSB, what do you think about this characterization summary?

The Authors Guild is pushing back. This is what they had to say:

“The Guild is looking into whether the feature, which was added without permission from publishers or authors, might infringe authors’ and publishers’ rights.

“Ask this Book, which is slated for a wider rollout in 2026, allows readers to query an AI chatbot about books they have purchased or borrowed. So far there is no way for publishers or authors to opt their books out of the feature, though as of this writing the feature is not available for all ebooks. It allows a reader to highlight text and click on an “Ask” icon to ask the AI to “explain” the selected text or enter their own question in the chatbot. All responses are generated from the book itself.

“The Guild is concerned that Ask this Book turns books into searchable, interactive products akin to enhanced ebooks or annotated editions—a new format for which rights should be specifically negotiated—and, given Amazon’s stronghold on ebook retail, it could usurp the burgeoning licensing market for interactive AI-enabled ebooks and audiobooks.”

Writer Beware isn’t too happy about the feature, either. They say, “Agents and publishers broadly regard anything to do with generative AI as a separate right reserved solely to the author, and publishing contracts are increasingly addressing this issue. The primary focus has been on preventing unpermissioned AI training, but with the technology embedding itself at warp speed in all aspects of the book business, the rights implications are expanding just as fast…especially where, as here, they sneak in under the radar.”

Should this be considered yet another format of a book? If so, what are the author’s rights?

As of now, there is no opt-out choice. Ask This Book is included automatically. It operates independently of the author, so they don’t get to review answers, suggest changes, or flag problems.

Your thoughts, TKZers? Are authors and publishers getting shortchanged?

**Note: if you’re upset with Amazon, my books are available wide.

New! Find me at Substack with Writings and Wanderings

Deadly Ambitions

Peace in Mapleton doesn’t last. Police Chief Gordon Hepler is already juggling a bitter ex-mayoral candidate who refuses to accept election results and a new council member determined to cut the police department’s funding.

Meanwhile, Angie’s long-delayed diner remodel uncovers an old journal, sparking her curiosity about the girl who wrote it. But as she digs for answers, is she uncovering more than she bargained for?

Now, Gordon must untangle political maneuvering, personal grudges, and hidden agendas before danger closes in on the people he loves most.

Deadly Ambitions delivers small-town intrigue, political tension, and page-turning suspense rooted in both history and today’s ambitions.

Terry Odell is an award-winning author of Mystery and Romantic Suspense, although she prefers to think of them all as “Mysteries with Relationships.”

