by James Scott Bell

@jamesscottbell

“Everybody talks about the weather,” Twain wrote, “but nobody does anything about it.”

Yes, and everybody talks about Artificial Intelligence, and nobody can do anything about it. It’s here, it’s there, it’s everywhere. It’s Skynet, it’s HAL, and soon it may be telling you, “I’m sorry, Dave, I can’t do that.”

Today I won’t revisit the pros and cons, complaints and commendations, misgivings and infatuations writers have with AI. Rather, I refer to a recent report from Microsoft on the professions most and least susceptible to disruption from generative AI.

Writers, we made #5!

- Interpreters and Translators

- Historians

- Passenger Attendants

- Sales Representatives of Services

- Writers and Authors

The professions least likely to be impacted are manual jobs like phlebotomists (people who draw your blood), highway maintenance workers, plumbers, massage therapists, roofers, and embalmers (stiff competition for this job).

First question is: what the heck’s the difference between writers and authors? It’s subtle.

A writer writes stuff (you’re welcome). But they may not own the stuff. A writer can be someone who produces content for someone else, a writer-for-hire, e.g., a ghostwriter. Clearly, AI is replacing them.

An author owns the stuff (and can therefore license it), and AI is replicating them. The big issue for us fiction writers is whether AI can produce more than soulless trope rearrangement. And whether authors who’ve spent years learning the craft and developing a singular voice can compete with AI in the marketplace.

This is not to say that authors should avoid AI altogether. For copywriting, book descriptions, and marketing materials, AI is good and fast, freeing up time for writing more fiction and playing Connections. It’s free, too. Pro copywriters are out of a job. Trad publishing doesn’t use them anymore, not to mention any other business that produces sales copy, which is every business.

Series writers can upload PDFs of their books to Google NotebookLM, press Enter, and boom: series bible. Need to recall all the plots in your 15-book series? Ask your notebook for summaries, and there they are. Need to recall how recurring characters were described in every book in which they appear? Presto. Those are all good uses of the tech.

There’s a dark side, of course. A big new scam is targeting authors via AI-generated phishing emails. These are slick (gone are the good old days of scam emails from Nigerian princes rife with shoddy grammar). They purport to be from an actual person who works for an actual marketing firm. This person just loves your book and wants to help you reach more readers!

What they’re doing is scraping info about you from the net and using high-praise buzzwords to give you a dopamine hit.

I got one of these just the other day. The sender (a female name) begins by saying she recently “came across your book” (one dead giveaway is when it doesn’t give you the title, though other emails do). She was “truly struck” by the “raw emotion and depth of storytelling.” And I “deserve” to have my book reach a wider audience. Dopamine!

The email goes on to promise higher book rankings on Amazon and a “customized campaign” to increase exposure across “key global markets.” She has “just worked with an author in a similar genre” who experienced a measurable increase in sales (but doesn’t tell us who the author is). She invites me to receive a “complimentary review” of my current Amazon presence and “explore” how the company can help me out. The email signs off with “Warm regards,” followed by the name…but no link to a website (which, of course, does not exist).

I laughed, then trashed it. I should have labeled it “spam,” for two days later I got a follow-up, hoping that I and my family “are doing well” (that’s so nice!) and understanding that “life can get busy” and reminding me “I have a specific idea for a campaign that I’m confident could get your book in front of a huge number of new readers who are actively looking for exactly this kind of story…I’d love to share the details with you in a quick 10-minute chat or call this week. No pressure at all, just a conversation to see if it’s a good fit.”

The ultimate goal of this “good fit” is to get my money and access to my KDP account. What could possibly go wrong with that? (You can read about this scam at the invaluable Writer Beware website.)

This con feeds off our bottom-line desire—we all want new readers. Well, the anecdotal evidence suggests that many readers sense when a book is AI-generated (and consider it “cheating”) versus having a unique voice and style, which only comes via the hard work of learning the craft, writing, getting feedback, and writing, writing, writing.

Yeah, we have to concede that AI is getting better at ~~plagiarizing~~ generating competent commercial fiction that can provide a quick escape. But will it create a rabid fan? I don’t think so. Only blood can do that.

So does your book really deserve to reach a larger audience? Not if AI writes it for you. Do the work. Be the author. Bleed. Get better. And if you need a side hustle, learn embalming.

Comments welcome.

Authors are not the only ones under threat. Human artists face competition from AI. Just for fun, check out this lovely, touching image created by ChatGPT. Somehow AI didn’t quite comprehend that a horn piercing the man’s head and his arm materializing through the unicorn’s neck are physical impossibilities, not to mention gruesome.

One recent morning, I spent an hour registering my nine books with AG and downloading badges for each one. Here’s the certification for my latest thriller,

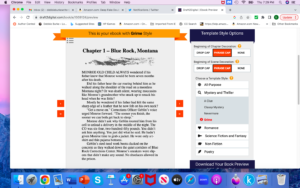

Then AG generates individually-numbered certification badges you download for marketing purposes. At this point, it’s an honor system with AG taking the author’s word.

In 2023, I wrote

Here’s what Amazon’s AI says about

Recently Draft2Digital (D2D) did a survey among authors, publishers, and others to determine how they felt about the use of AI and what authors would consider fair compensation for use of their work. D2D CEO Kris Austin kindly gave permission to quote from the survey results (full results at this